How can we help you?

Periodically checks the accuracy of the results

Videos and Tutorials

Questions and Answers

First Steps to Implementation

The administrator is responsible for managing the relationship with Controllab, keeping the registration data up to date, managing and delegating tasks in the Online System, receiving and distributing materials and correspondence, ensuring deadlines are met and analyzing results.

One of the main tasks is to disseminate information, starting with the purpose, operating rules and evaluation criteria of the PT (proficiency testing). To this end, it is important to ensure that everyone involved in the laboratory reads and understands the Gibi Quality Control information, the Participant Manual and the “Questions and Answers” available on the site.

For day-to-day management of the program, there are the warning emails and the homepage of the Online System, which lists the rounds in progress, with their deadlines for reporting results, and the recently published evaluations.

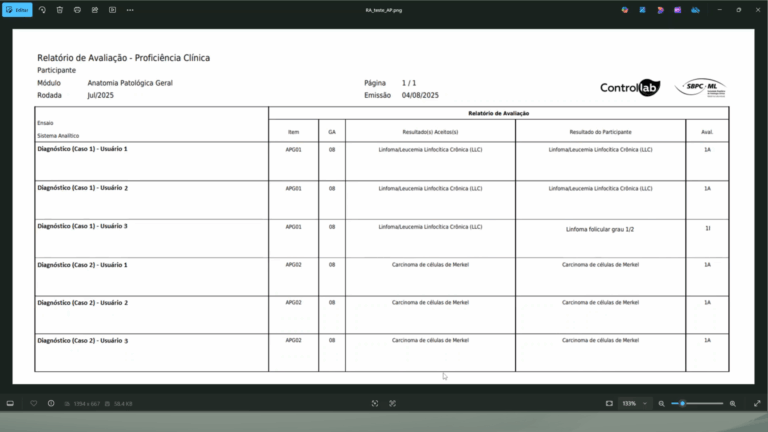

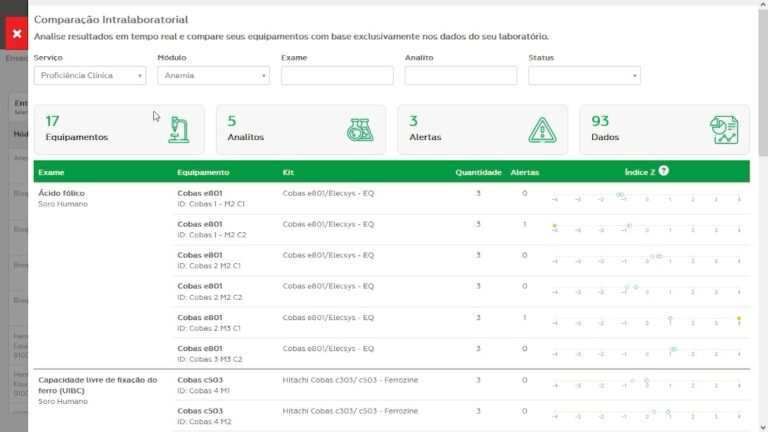

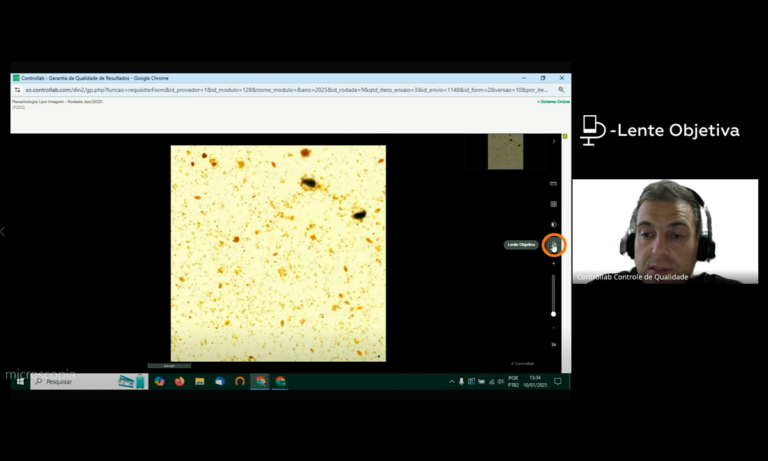

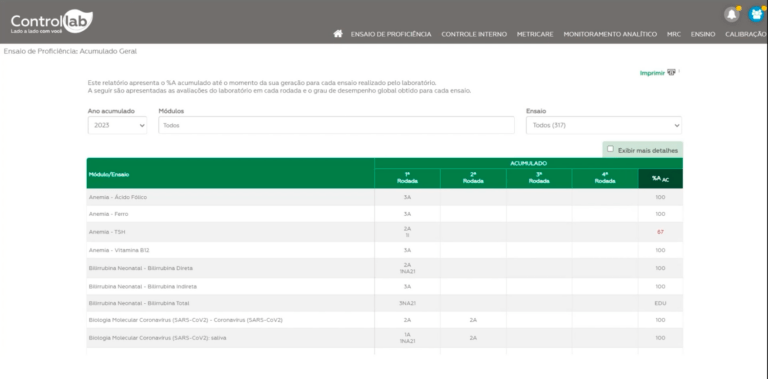

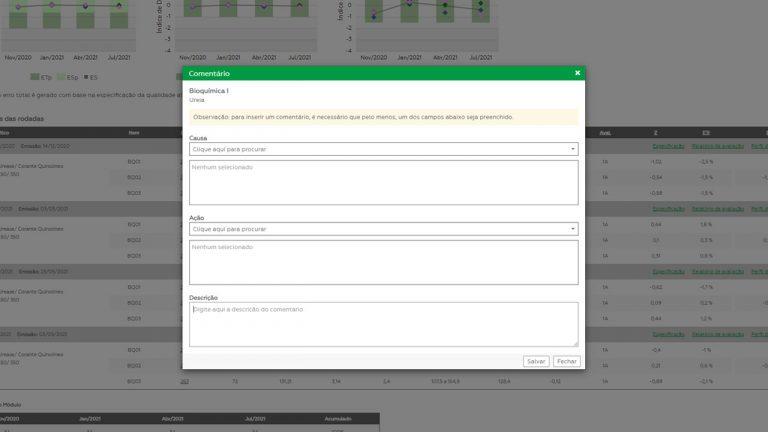

To monitor the evaluations, there are the management report and the general accumulation. It is important to analyze the results critically together with those involved and to investigate the causes of inadequate results immediately, based on the evaluation report, the results profile and internal documents/records. To help you in this step there is the “Diary of Proficiency”, available in print format and for use in the Online System (Education).

A new user usually has questions about how the service works and about their responsibilities. For this reason, the first recommendation is to read the Participant Manual, which explains how the program works, and the “Questions and Answers”, which clarify the participants’ most common questions.

The main concern is to run the control correctly, so that the evaluation reflects only the performance of the routine and is not contaminated by errors in handling the quality control itself.

The main precautions include:

1. Read and follow the instructions for using the materials to avoid handling mistakes, since some procedures differ from those adopted for routine patient samples;

2. Reconstitute freeze-dried material with reagent water, using a calibrated pipettor and paying attention to the volume indicated on the label, since each material has a specific volume and errors in this step compromise the evaluation;

3. Use reconstituted material immediately to avoid variations resulting from improper storage;

4. Give special attention to dilutions and consequent calculations to avoid reporting erroneous results;

5. Check the need to convert results to the unit adopted by the program and perform the conversion correctly (see “Why do I have to convert my results to the unit adopted in the PT?”);

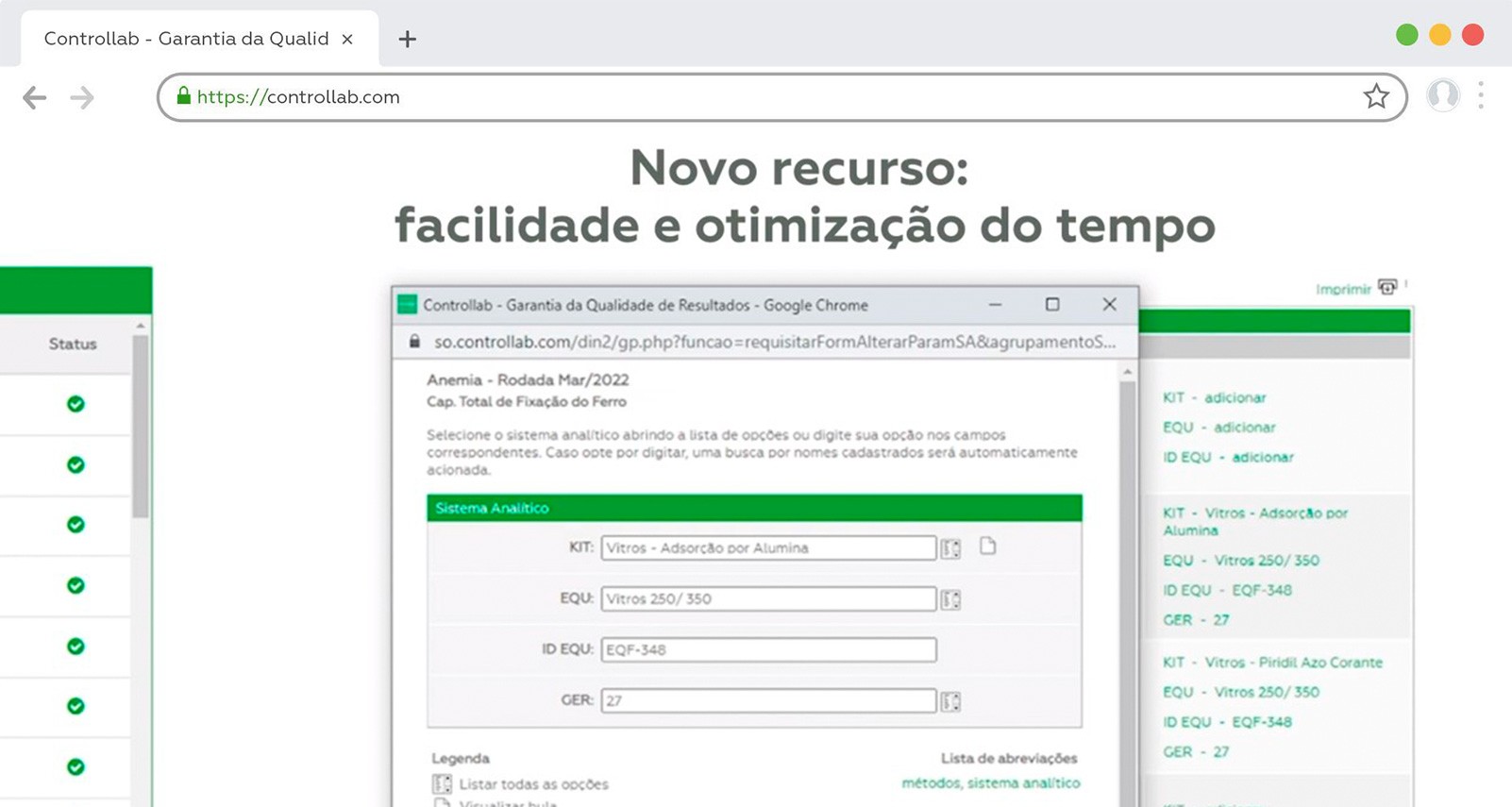

6. Identify the analytical system correctly (reagent, method, equipment, etc.) and report all requested information. Depending on the test, missing methodology data may make the evaluation impossible;

7. Be aware of the response deadline and e-mail warnings (see “What happens when I stop responding to a round?”);

8. Contact Controllab immediately if you have any questions.

Although the modules are quarterly, participants who pay monthly have the amount split into monthly installments. The invoice therefore lists all the modules in which the participant is enrolled and charges the amount corresponding to the monthly apportionment. This is done so that the laboratory always pays the same amount, which makes control easier.

Operation

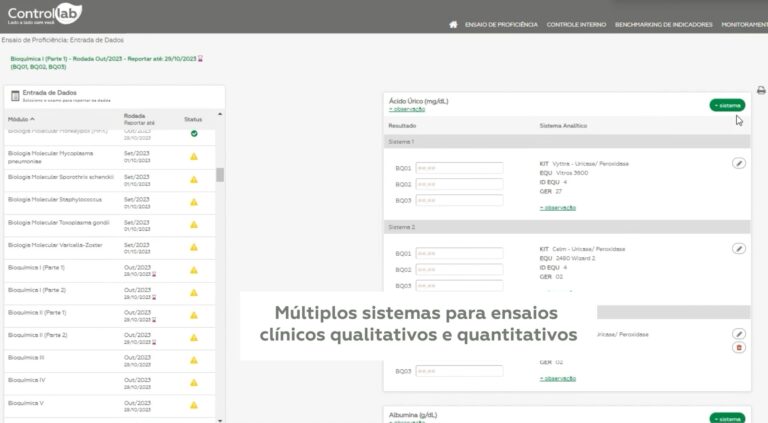

It’s simple! Check if the related form has the option to report this situation in the “+observation” field.

Form with the option “+observation”: select the alternative that matches the laboratory's situation, “Not performed: Not offered” or “Not performed: Outsource”. Once checked, the alternative remains applied in the form, and the field for reporting the test result is disabled in the current round and in the following rounds. To re-enable result reporting for that test, unmark the observation and apply.

Form without the option “+observation”: the laboratory must not report a result for a test that it does not perform or that it outsources. Leave the field blank.

As the modules of the PT (proficiency testing) are composed of test groups, it is expected that some tests will not be part of a participant’s routine.

If you outsource a test, do not send results that were not obtained by your laboratory; otherwise, they will appear in your evaluation and certificate as if your laboratory had performed them.

Each participant should report only results obtained in its own routine, and should not report tests performed by third parties or by another technical unit of the laboratory. Likewise, participants should not exchange information with other laboratories. This is because:

1. The objective of participating in the program is to have the opportunity to identify whether each participant’s processes are performing well. When the results are obtained by someone else, there is no benefit.

2. Duplicating the result of a single laboratory inserts an artificial trend into the result group, which may distort the statistical analysis and the evaluation of the other participants. The statistical model adopted assumes only one result per participant, each contributing individually to the determination of the target value used to calculate the evaluation range.

3. The certificate of proficiency and other documents proving participation in the program are issued to the enrollee, which implies that all the tests were performed, and all the results obtained, by the enrollee.

The modules of the PT (proficiency testing) are quarterly to meet statistical requirements and those of ANVISA/REBLAS. The purpose of proficiency testing is to identify errors, especially systematic ones. However, it is only possible to conclude whether an error is systematic (repetitive) or random when at least two different items are tested.

The first prerequisite, therefore, is never to send a single sample per round. If rounds were sent monthly, there would be at least 24 items per year for each test/module (12 rounds with at least 2 items each), and this would make the program much more costly.

Moreover, there are no real gains that justify such frequency and quantity of materials. Worldwide, 10 to 20 test items are typically adopted, with a common periodicity of 2 to 4 months.
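The arithmetic behind these figures can be sketched in a few lines of Python. This is only an illustration: the function name `items_per_year` is hypothetical, and the minimum of two items per round is taken from the prerequisite described above.

```python
def items_per_year(rounds_per_year: int, items_per_round: int) -> int:
    """Total test items a participant receives per year for one test/module."""
    if rounds_per_year < 1 or items_per_round < 1:
        raise ValueError("rounds and items must be positive")
    return rounds_per_year * items_per_round


# At least two items per round are needed to tell a repetitive error from a random one.
MIN_ITEMS_PER_ROUND = 2

monthly = items_per_year(12, MIN_ITEMS_PER_ROUND)   # monthly rounds
quarterly = items_per_year(4, MIN_ITEMS_PER_ROUND)  # quarterly rounds

print(f"monthly: {monthly} items/year, quarterly: {quarterly} items/year")
```

With the same minimum per round, monthly rounds triple the number of items compared with quarterly rounds.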

This rule, however, presupposes that the laboratory adopts effective internal controls more frequently in its routine (daily, between batches, etc.). Do not replace internal control with external control. Internal control verifies reproducibility and small deviations, whereas the proficiency test is mainly directed towards accuracy.

The rounds are sent according to the annual calendar, which is defined in advance and distributed to the participants. The rounds are usually sent in the first half of the month, with some adaptations for the current year and holidays. It is therefore necessary to consult the annual calendar, available on the internet (website and Online System).

Users of the Online System also receive an email notification when a round is sent.

Not necessarily. Each module has its materials (test items), which can be:

• shared by more than one module (such as Biochemistry and Basic Hormones: all tests are measured on the same item);

• a single item for all tests of a module (as in Tumor Markers: one item for analyzing all markers);

• individual per test (as in Molecular Biology: one item for each type of test – HBV, HCV, and HIV);

• different for test groups (as in Cell Count: one material for global counting and one for differential).

Items are labeled with an individual identification (e.g. BA01), and response forms (printed or online) repeat this identification for the tests to which the item corresponds.

This distribution is always the same and repeated every round, so that the participant becomes familiar with the system. Any change is communicated to the participants.

Results Report

In order to carry out a statistical study and compare your results with those of the other participants of the PT (proficiency testing), it is necessary to adopt a single unit of measurement. Thus, we always seek to adopt the unit most used in the market.

To compare the results of different participants and to perform statistical calculations, it is necessary to set a standard number of decimal places. Thus, the number of decimal places chosen is the one most commonly used and/or most relevant for the analysis of results.
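As an illustration of reporting in a single unit with a fixed number of decimal places, the sketch below converts a glucose result and rounds it. Everything specific here is an assumption made for the example: the analyte, the direction of conversion (mg/dL to mmol/L), the function name `report_value` and the number of decimals are not taken from the program, so always use the unit and decimals shown on the response form.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative factor only: 1 mmol/L of glucose corresponds to about 18.016 mg/dL.
GLUCOSE_MG_DL_PER_MMOL_L = Decimal("18.016")


def report_value(value_mg_dl: str, decimals: int) -> Decimal:
    """Convert a glucose result from mg/dL to mmol/L and round it to a fixed number of decimals."""
    converted = Decimal(value_mg_dl) / GLUCOSE_MG_DL_PER_MMOL_L
    quantum = Decimal(10) ** -decimals  # decimals=1 gives Decimal("0.1")
    return converted.quantize(quantum, rounding=ROUND_HALF_UP)


reported = report_value("90", decimals=1)
print(reported)
```

`Decimal` with an explicit rounding mode is used instead of the built-in `round`, so that values ending in 5 are always rounded the same way.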

Parameters calculated manually from multiple systems (using other parameters not directly related to the analysis in question) are evaluated for educational purposes only in the program. These parameters are not obtained directly from the measurements, accumulate uncertainty from the variability of each raw result, and do not have the reliability required for an evaluation.

This “non-achievement – NR” counts towards your evaluation in the PT (proficiency testing) and must be recovered at the end of the period, in the special round. Note, however, that the process is different for tests that are not routinely performed. See the answer to “How should I proceed with tests that I don’t perform/outsource”.

The rounds are periodic (quarterly, half-yearly) and have a reduced number of test items (compared with the volume of patients served by the laboratory). Therefore, it is very important that the laboratory responds to all rounds and to all tests in its routine.

A non-participation is only excused when the laboratory’s activities have been interrupted, for example by an instrument breakdown or a lack of kits. In this case, it is necessary to inform Controllab. This allowance can be granted only once a year, to maintain the annual representativeness of the results. See the answer to “If you can’t answer for lack of kits or broken equipment, what happens?”.

If the laboratory’s activities are stopped for the entire period of a round due to operational problems (such as equipment downtime or a lack of kits), check whether the form for the inoperative analytical system has the option to report this situation in the “+observation” field.

Form with option “+observation”: select the alternative “No inputs at the moment or EQU in maintenance/unavailable”. When this alternative is checked, the Justified Non-Participation (NPJ) request is processed and released automatically along with the evaluation process.

Form without option “+observation”: the laboratory must contact Controllab to register the Justified Non-Participation (NPJ) request.

Controllab will then be able to disregard this round in the laboratory’s score. However, this procedure cannot occur more than once a year, as the sampling of the proficiency testing would no longer be representative for the certificate.

Evaluation

Evaluations are released, on average, three weeks after the deadline for sending the results. New policies are continually being studied and implemented to shorten this evaluation period further.

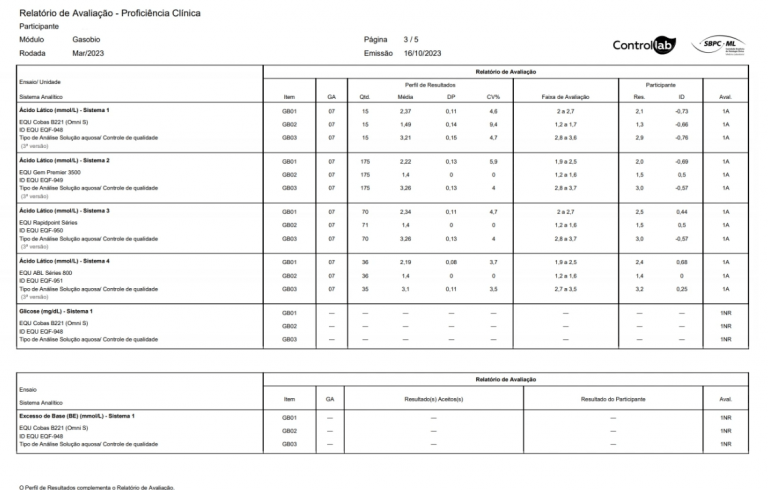

The evaluation process includes (1) receipt of the results, (2) statistical and technical analysis, (3) customer feedback analysis and (4) generation / access to evaluations.

At the receipt stage, we are increasingly restricting extensions of the response deadline to minimize the impact on the release of evaluations. In the analysis phase, the process has been optimized without giving up analysis by qualified professionals and consultation with the advisors in addition to the statistical treatment. These policies aim to ensure a more thorough analysis and more reliable evaluations.

Customer feedback analysis occurs simultaneously with the statistical and technical analysis of the data and must be completed before the evaluation is released, since the comments may contain information that is important for defining the evaluation. However, the volume of comments grows every day (it is ten to twenty times greater than when the Online System was implemented), the comments carry varied information (see ‘When I comment on response forms do you respond?’) and analyzing them often takes a lot of time in the evaluation process. Actions to improve the use of the ‘feedback’ field and to reduce frequent questions have been adopted to minimize this impact.

Generation of and access to the evaluation have been immediate since 2004. Once released, the evaluation is available for consultation in the Online System.

A delay in the evaluation is usually associated with some atypical behavior in the data that requires a more complex analysis, such as material reanalysis (material quality control), contact with users/manufacturers, or research and consultation with third parties (other advisors).

Quality control materials are analyzed beforehand. They go through a quality control that aims to approve them with regard to homogeneity and stability. Even so, evaluation based on the participants’ results is the statistical model most used by proficiency testing providers. This is because it offers a consistent volume of results for evaluating accuracy and allows comparison within similar analytical systems, since the control performed by the provider does not cover all existing analytical systems.

However, it is important to note that the participants’ results are compared with the provider’s quality control before an evaluation is released. Controllab always seeks consistency between these results so that there are no mistaken evaluations.

A result may not be evaluated because:

(a) it did not form an evaluation group (fewer than 5 results);

(b) the evaluation group presented high variation;

(c) the advisory group so determined; this decision is described in the document “Results Profile”.

In some specific situations, groups with fewer than 5 results can be evaluated, provided that the statistical consistency of the data is verified.
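The group-size criterion can be pictured with the short sketch below. It is not Controllab's evaluation procedure: the function name `groups_too_small` is hypothetical, and grouping results by analytical system is an assumption made for the example. Only the minimum of 5 results comes from the text, and the exception for statistically consistent small groups is not modeled.

```python
from collections import Counter

MIN_GROUP_SIZE = 5  # from the text: fewer than 5 results do not form an evaluation group


def groups_too_small(systems: list[str], min_size: int = MIN_GROUP_SIZE) -> list[str]:
    """Return the analytical systems whose number of results is below the minimum group size."""
    counts = Counter(systems)
    return sorted(name for name, n in counts.items() if n < min_size)


# Hypothetical round: one entry per reported result, labeled by analytical system.
reported = ["Method A"] * 7 + ["Method B"] * 3 + ["Method C"] * 5
small = groups_too_small(reported)
print(small)
```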

There are a few possibilities:

1. Test with high variation: the results of the participants do not form a consistent statistic, presenting high variation. The option is not to evaluate based on this data.

2. Tests with predictable results: some analytes are only available (human matrix, etc.) in normal concentrations, and it is not possible to change them synthetically. In this case, they do not meet the proficiency testing requirement of “materials with unknown results” and no evaluation is done.

3. Insufficient number of evaluations: 8 to 16 items per year are sent, depending on the test. If more than 50% of them have not been evaluated (see the question “Why sometimes my results are not evaluated?”), the option is not to evaluate, because the volume of evaluations is not representative for the year.
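The third case is a simple proportion check, sketched below. The function name `annual_evaluation_possible` and its arguments are hypothetical; only the 50% threshold and the 8 to 16 items per year come from the text.

```python
def annual_evaluation_possible(items_sent: int, items_evaluated: int) -> bool:
    """Return False when more than 50% of the items sent in the year were not evaluated."""
    if items_sent <= 0 or not 0 <= items_evaluated <= items_sent:
        raise ValueError("invalid item counts")
    not_evaluated = items_sent - items_evaluated
    # Integer comparison avoids floating-point issues at exactly 50%.
    return not_evaluated * 2 <= items_sent


print(annual_evaluation_possible(8, 4))  # exactly half not evaluated
print(annual_evaluation_possible(8, 3))  # more than half not evaluated
```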

Certificate and related

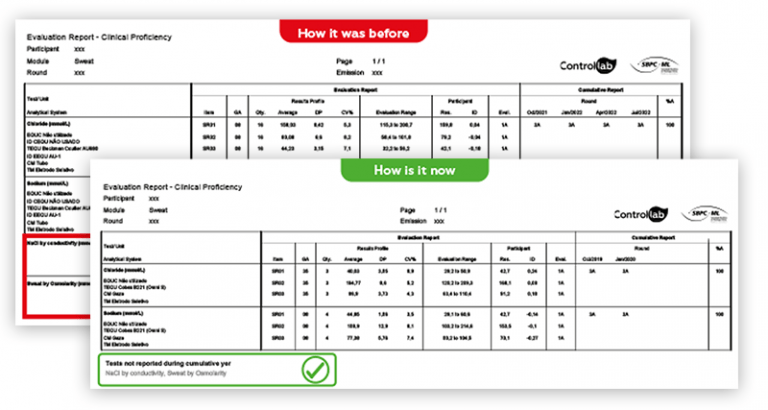

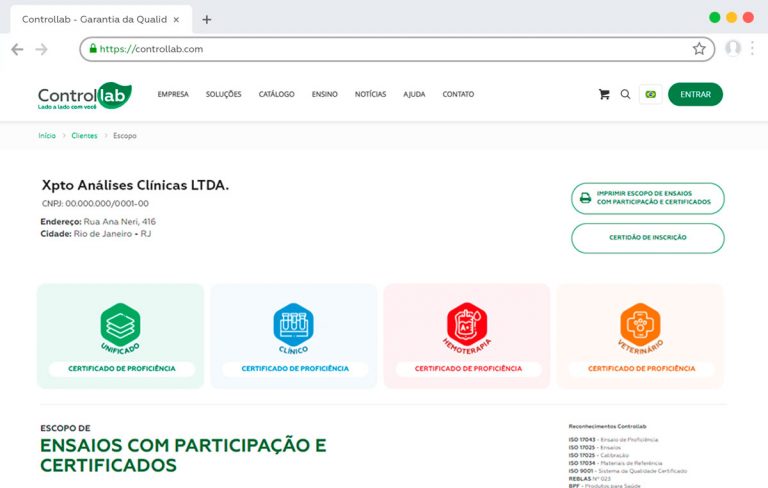

The certificate lists all tests with performance higher than or equal to that defined by ANVISA, in procedure GGLAS 02/43. We define these tests as “adequate” because they have reached the minimum acceptable level of performance.

The certificate also presents educational tests (see ‘Why are some tests classified as EDU?’). Inadequate tests, with less than acceptable performance, are not listed on the certificate. The “Management Report”, available for consultation in the Online System, maintains an updated list of inadequate tests.

Monitors the analytical performance of systems in each routine.

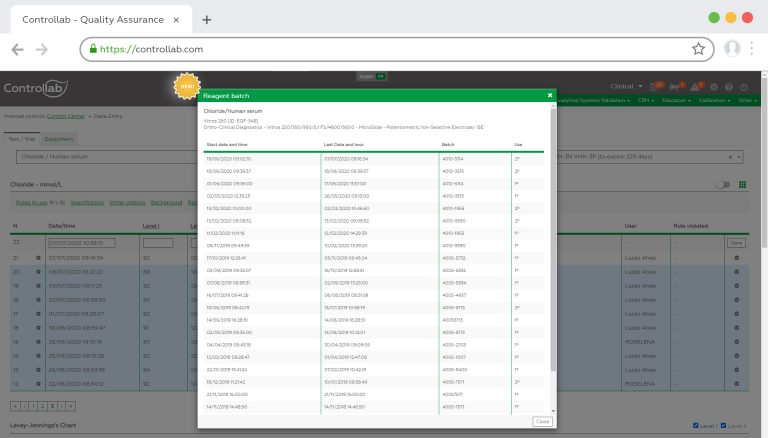

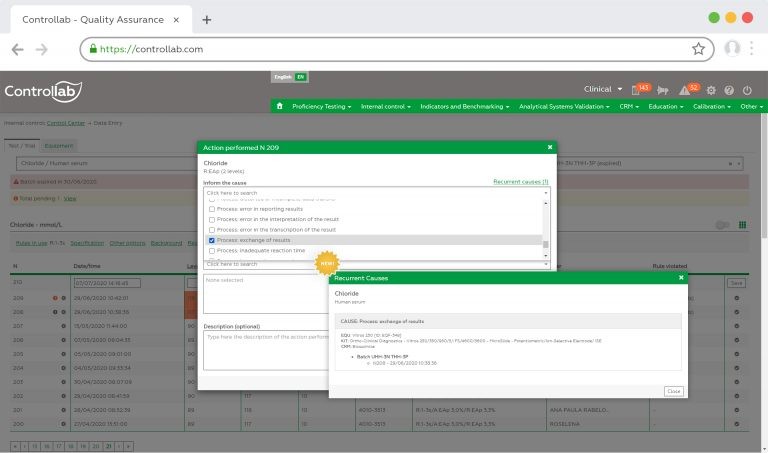

Internal Control

Daily reconstitution of the IC (internal control) avoids the propagation of reconstitution errors and gives stability with less dependence on storage conditions. Therefore, reconstituting daily, without dividing into aliquots, is the best way to use it.

After opening and reconstitution, the control material is subject to variations and contamination, in the same way as patient materials. Thus, users who choose to aliquot must be very careful with the reconstitution and storage of the material.

The use of two levels of control has already been proposed in GCLP (Good Clinical Laboratory Practices), in CLIA’88 (American law) and in accreditation processes. This is because studies point to greater efficiency when control is done with multiple levels, which allow greater process traceability and greater coverage of the measuring range.

Using only one level of control essentially requires rejection whenever a control result exceeds ± 2 SD, for example. This leads to about 5% false rejections for 1 daily reading, about 10% for 2 daily readings, and so on. Every false rejection generates rework and costs, and helps neither to understand the process better nor to improve it.
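The growth of false rejections with the number of readings can be checked with a short calculation. The sketch assumes that readings are independent and that each in-control reading has a 5% chance of falling outside ± 2 SD (the rounded figure used above; the exact value for a normal distribution is slightly lower). The function name `false_rejection_rate` is hypothetical.

```python
def false_rejection_rate(readings: int, per_reading: float = 0.05) -> float:
    """Probability that at least one of `readings` in-control results falls outside the limits."""
    if readings < 1:
        raise ValueError("readings must be at least 1")
    return 1.0 - (1.0 - per_reading) ** readings


rates = {n: round(false_rejection_rate(n), 4) for n in (1, 2, 4)}
for n, rate in rates.items():
    print(f"{n} reading(s): {rate:.2%} false rejections")
```

For two readings, the exact result under these assumptions is 9.75%, which the text rounds to 10%.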

Westgard itself provides software that is capable of performing statistical simulations and defining the best combination of levels, frequency and control rules for each test. Remember that for some tests, the recommended number of levels is greater than 2.

Thus, manufacturers usually make their controls available with the number of levels most appropriate for the width of the measurement range and for the characteristics of the methodology used.

Monitors laboratory requirements and improves operational results.

First Steps to Implementation:

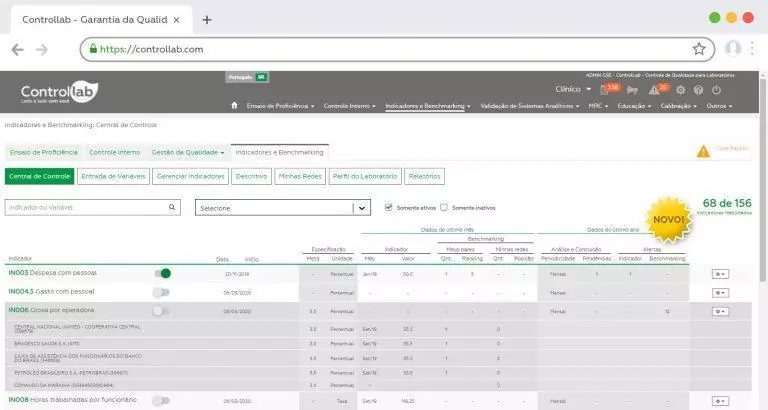

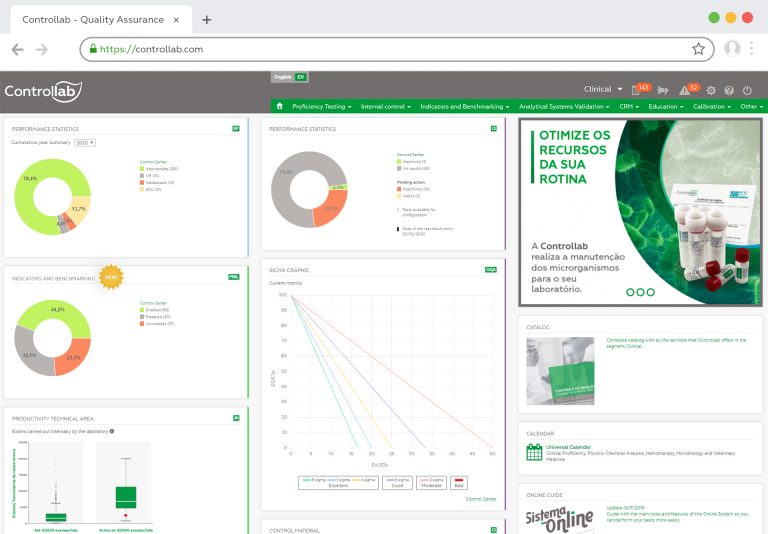

Laboratory indicators are essential elements in measuring management and process performance, allowing a comparative evaluation, at a national and international level, of the results of clinical laboratories against their peers and market leaders.

This process of comparing practices and metrics, also known as Benchmarking, enables laboratories to make more effective decisions regarding their strategies and fosters continuous improvement of their processes, contributing to patient safety and the increased productivity and sustainability of the clinical laboratory medicine sector.

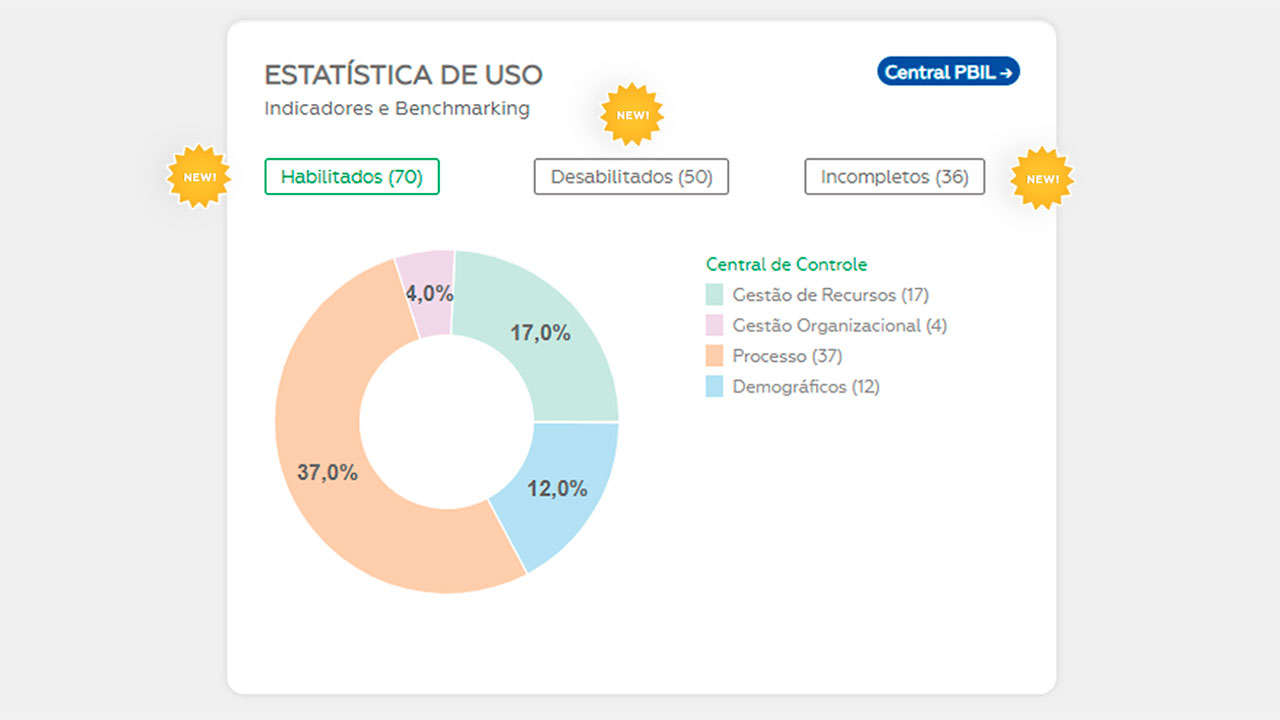

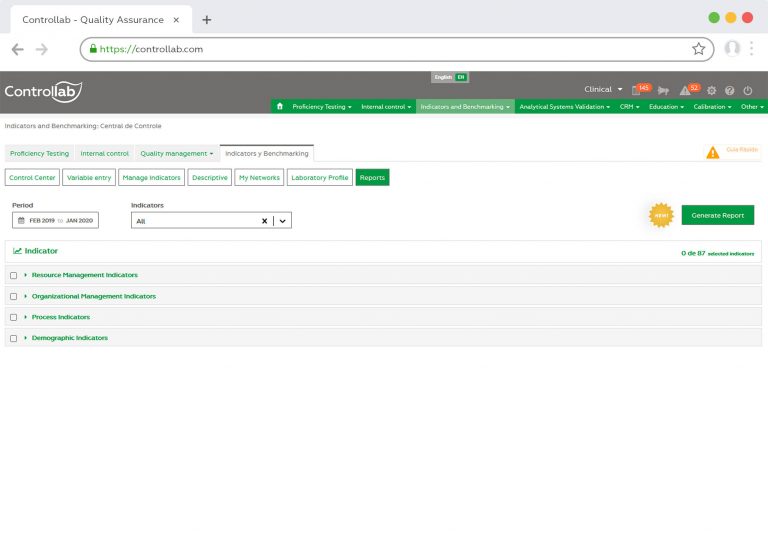

A new user must first define which data the laboratory is already able to monitor and mark this data in the “Manage Indicators” menu. With this selection, the system will automatically display which indicators have been enabled for benchmarking.

In order for the indicators to contribute to improving the quality of health care, it is necessary that laboratory staff involved in the monitored processes have access to data specifications and how they should be collected, especially in the critical aspects of the laboratory process.

If you have any questions, you should contact Controllab.

Operation:

The discussion group is a group of volunteers from a number of different laboratories and is responsible for defining and detailing the indicators, analyzing overall results, clarifying questions and proposing improvements to the program.

Yes. The data is analyzed as a whole, without identifying the laboratory that sent it and, for greater security, the laboratories are given an access number so that their name is not exposed in any material. Only general data is made available to other laboratories.

Comparison Between Networks (support or brands):

In “My Networks” the user can create their own comparison network for a closed benchmarking. With this tool, the user may invite laboratories from support networks or from the same brand to compare their performance with each other.

For the comparison to be generated, network members must respond within the reporting period of the indicators. The member who does not respond to the indicator will not have access to the comparison.

To create a comparison network, the Laboratory Indicator Benchmarking Program participant must access the option “My Networks” (Online System > Metricare > Control Center > My Networks > Create New Network).

To invite other labs you will need the name of the institution, the name of the person in charge and the contact email. Once the information is completed and the network has been created, all invited members will receive, at their registered email addresses, an activation code to confirm acceptance.

Invited members will receive an activation code in their registered emails. This code must be entered in the Online System > Control Center > My Networks > Enter Code. Once the code is entered and the action confirmed, this user will be a member of the network.

The invited laboratory not participating in the Metricare Laboratory Module should contact our Call Center (+55 21 3891-9900 – atendimento@controllab.com).

Any laboratory participating in the Metricare Laboratory Module. However, the invitation may only be sent and accepted by a user registered with the “administrator” profile in the Online System.

The network is active only when all invited members accept the invitation. If any invitations are still pending, the network status will also be pending. In this case, the institution responsible for the created network may resend the invitation or delete this member. Once the network is activated, the comparison will be generated within the same period as the Program benchmarking release (on the first day of each month).
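The activation rule can be written as a one-line check. This is a sketch of the rule as described above, not the Online System's implementation; the function name `network_status` and the dictionary of invitations are hypothetical.

```python
def network_status(invitations: dict[str, bool]) -> str:
    """Return "active" only when every invited member has accepted; otherwise "pending"."""
    if not invitations:
        raise ValueError("a network needs at least one invited member")
    return "active" if all(invitations.values()) else "pending"


# Hypothetical network: institution name -> whether the invitation was accepted.
invited = {"Lab A": True, "Lab B": False, "Lab C": True}
print(network_status(invited))
```

Resending the invitation to the pending member, or deleting that member, is what moves the network out of the pending state.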

There are 3 identification options:

- Total: All institutions can be identified in the reports.

- Partial: Only the institution that created the network can identify the others in the reports.

- Locked: No institutions will be identified in the report (minimum 3 institutions required).

During the creation of the network, the user must choose one of these options and, in the invitation to the laboratories, the identification option configured by the responsible institution will be displayed.
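The three identification options can be summarized as a small model. It is an illustration only: the class and function names are hypothetical, and counting the creating institution towards the minimum of 3 for the Locked option is an assumption, since the text does not say how the minimum is counted.

```python
from enum import Enum


class Identification(Enum):
    TOTAL = "total"      # all institutions can be identified in the reports
    PARTIAL = "partial"  # only the creating institution can identify the others
    LOCKED = "locked"    # no institution is identified


MIN_INSTITUTIONS_LOCKED = 3  # from the text; how institutions are counted is assumed


def validate_network(mode: Identification, institutions: int) -> None:
    """Raise ValueError when a Locked network has fewer than the minimum number of institutions."""
    if mode is Identification.LOCKED and institutions < MIN_INSTITUTIONS_LOCKED:
        raise ValueError("Locked identification requires at least 3 institutions")


def can_identify_others(mode: Identification, viewer_is_creator: bool) -> bool:
    """Tell whether a viewer can see the names of the other institutions in the reports."""
    if mode is Identification.TOTAL:
        return True
    if mode is Identification.PARTIAL:
        return viewer_is_creator
    return False


print(can_identify_others(Identification.PARTIAL, viewer_is_creator=False))
```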

Only the institution responsible for the network (the institution that created the comparison network) can manage it. However, any member of the group (with the “administrator” profile in the Online System) can leave the comparison network at any time.

From the exclusion date, the indicators will no longer be compared between network members. Once the group is deleted, it cannot be reactivated.

Optimize your routine resources.

Strain Control

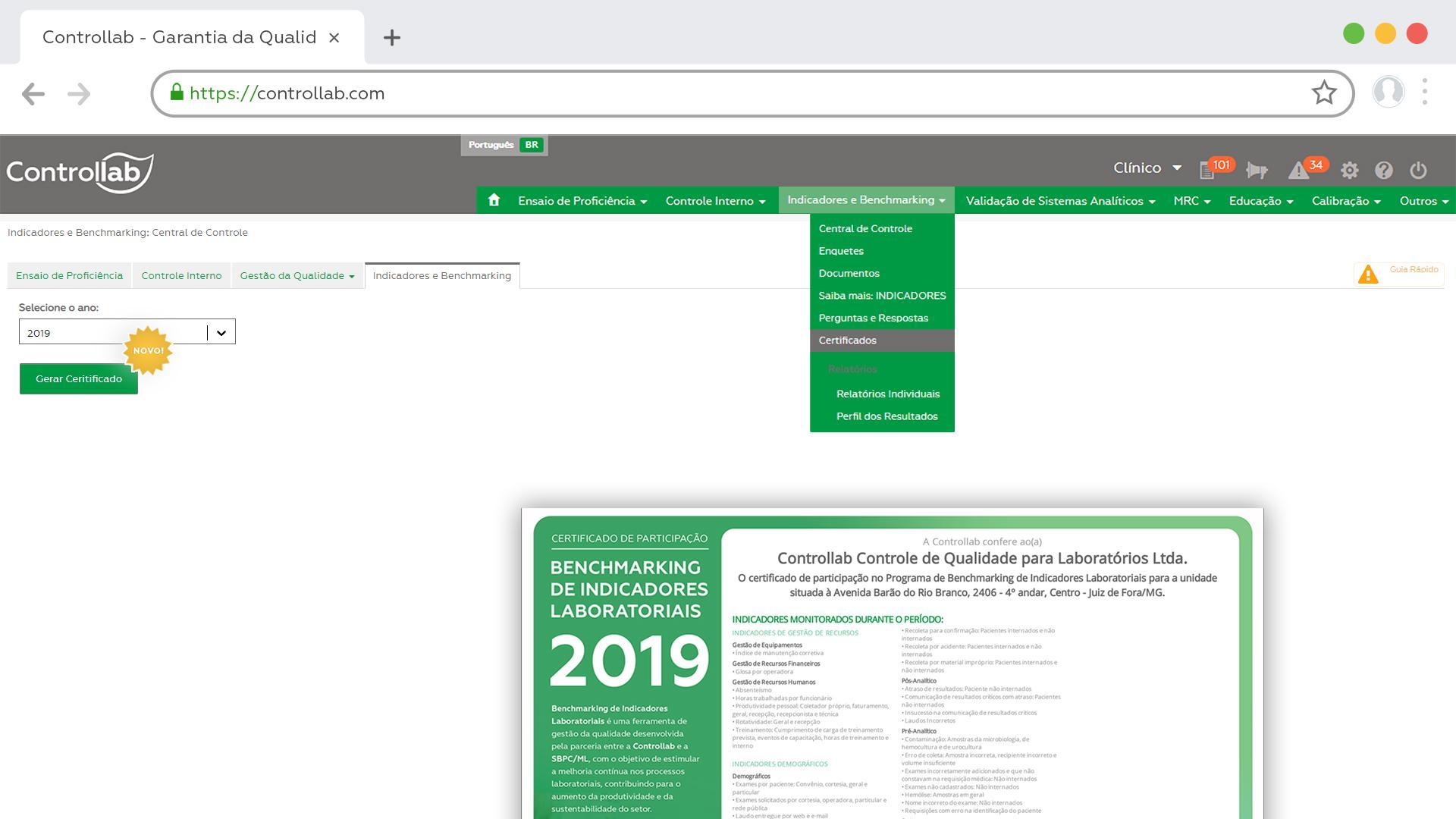

The certificates are made available online. They are digitally signed, ensuring the authenticity of the document.

Users registered for monthly deliveries receive a login and password to access the Online System. In this system, the certificates are available as PDF files in the Internal Control menu, while the material lasts. Users who purchase the materials separately receive a link to the certificate file by email.

Some PDF settings may generate signature invalidity messages or similar. In these cases, we ask you to adjust the PDF settings illustrated in this video.

Adds more accuracy and traceability to the analytical process.

Certified Reference Materials

The certificates are made available online. They are digitally signed, ensuring the authenticity of the document.

Users registered for monthly deliveries receive a login and password to access the Online System. In this system, the certificates are available as PDF files in the solution menu, while the material lasts. Users who purchase the materials separately receive a link to the certificate file by email.

Some PDF settings may generate signature invalidity messages or similar. In these cases, we ask you to adjust the PDF settings illustrated in this video.

Prevents measurement errors in analysis.

Calibration of instruments

No. Calibration is a measurement process that reports the actual volume dispensed or contained by a piece of glassware, the actual reading of a thermometer relative to the value indicated on its scale, and so on. In short, it is an “x-ray” of the instrument’s current functioning.

Based on the calibration result, it is possible to decide whether the instrument is suitable for use. Some instruments that show a deviation from the expected value (error) can be adjusted, provided that the cost of the repair is justified and the instrument has a mechanism for adjustment.

It is up to the user to define the maximum permissible error for the maintenance company to make the adjustment. After adjustment, a new calibration is necessary to check its effectiveness.

There is no “validity” previously defined for a calibration certificate. The user is the one who should define the interval between calibrations to be adopted for each instrument, considering its frequency of use and the results obtained in the last calibrations.

Initially, when there is no instrument history, short intervals are recommended. This prevents an instrument that is out of condition from affecting a large volume of results for a long time, allowing faster corrective action and less impact on the routine.

The calibration certificate shows the average (obtained by the instrument after successive measurements) and its uncertainty (variation of the measurements). The user must verify that the range of results (average ± uncertainty) presented in the certificate falls within the maximum error allowed by the laboratory (usually called tolerance).
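The check described above can be sketched as follows. It assumes that the certificate reports the average measured value and that the laboratory expresses its tolerance as a maximum deviation from the nominal value; the function name `within_tolerance` and the pipette figures are hypothetical.

```python
def within_tolerance(nominal: float, mean: float, uncertainty: float, tolerance: float) -> bool:
    """Return True when the whole interval mean ± uncertainty lies inside nominal ± tolerance."""
    if uncertainty < 0 or tolerance < 0:
        raise ValueError("uncertainty and tolerance must be non-negative")
    # The worst-case deviation is the mean error plus the uncertainty.
    return abs(mean - nominal) + uncertainty <= tolerance


# Hypothetical 100 µL pipette with a laboratory tolerance of ± 2.0 µL.
print(within_tolerance(nominal=100.0, mean=99.2, uncertainty=0.5, tolerance=2.0))
print(within_tolerance(nominal=100.0, mean=98.4, uncertainty=0.5, tolerance=2.0))
```

In the second case the average alone is within tolerance, but the average plus its uncertainty is not, so the instrument would not be accepted under this criterion.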

In some instruments, this is possible; however, it is not recommended.

Micropipettes/pipettors usually have an internal lock, which the user can override by forcing the adjustment to volumes above or below the recommended range of use.

However, this practice can start a process of loss of adjustment and calibration, in addition to loosening or breaking the thread, causing the instrument to dispense wrong volumes. Great care must also be taken with instruments whose indicated values must be multiplied by 10 to obtain the volume dispensed.